For decades, Product Lifecycle Management (PLM) has been the backbone of the manufacturing and engineering worlds. It was designed to be the “single source of truth,” a digital vault where every CAD file, Bill of Materials (BOM), and change order lived in harmony. But if we are being honest, for many organizations, that “vault” has started to feel more like a fortress—impenetrable, expensive to maintain, and incredibly difficult to renovate.

As we stare down the barrel of the next decade, the industrial landscape is shifting beneath our feet. Products are no longer just hardware; they are complex systems of software, electronics, and mechanical components. Supply chains are no longer linear; they are chaotic, global webs. In this environment, the rigid, monolithic PLM systems of the early 2000s aren’t just aging—they are becoming a liability.

To survive, PLM must evolve. The future belongs to architectures that are cloud-native and composable. Here is why.

The Death of the “Monolith”

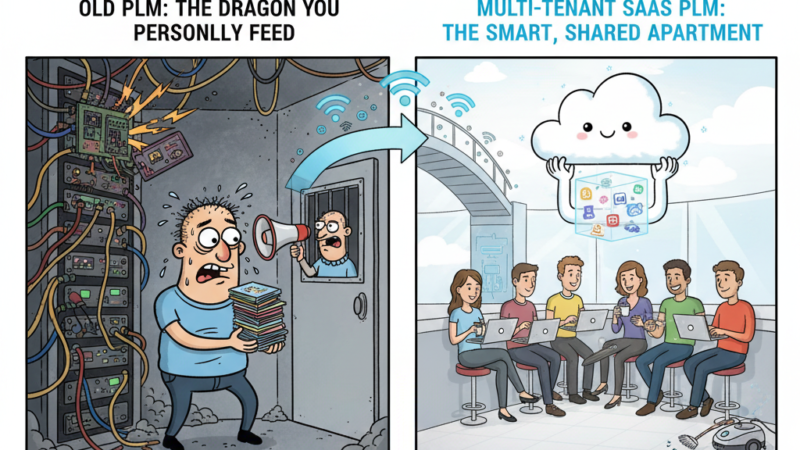

To understand where we are going, we have to look at where we’ve been. Traditional PLM systems were built as “monoliths.” This means the entire application—the database, the user interface, the business logic—was bundled into one massive, tightly coupled codebase.

When you wanted to upgrade a monolithic system, it was a multi-year project involving consultants, millions of dollars, and a terrifying amount of custom code. Because everything was interconnected, changing one small feature could break ten others. As a result, many companies stayed on software that was five or ten years old, too afraid of the disruption to move forward.

This “version lock” is the silent killer of innovation. In a world where a startup can prototype, test, and launch a product in months, legacy companies cannot afford to be held back by their own internal tools.

What Does “Cloud-Native” Actually Mean?

There is a common misconception that “cloud” just means “someone else’s computer.” Many legacy PLM vendors have taken their old monolithic software, hosted it on a remote server, and slapped a “Cloud” sticker on it. This is often called “cloud-washed” or “hosted” PLM.

True cloud-native PLM is built from the ground up to live in the cloud. It leverages the inherent advantages of cloud computing:

- Elastic Scalability: If you have a massive simulation to run or a global team joining a project, the system automatically scales its computing power up or down. You don’t have to buy more servers; the architecture breathes with your business.

- Continuous Updates: Instead of the “big bang” upgrade every five years, cloud-native systems update incrementally. New features and security patches roll out weekly or monthly without downtime.

- High Availability: By replicating data across multiple regions, cloud-native systems keep your data accessible even if an entire data center goes dark.

Without a cloud-native foundation, you are simply paying a subscription fee to manage the same old bottlenecks.

The Power of Composability: The “Lego” Approach

While cloud-native is about where the software lives, composability is about how it is built.

A composable architecture treats PLM features as independent, plug-and-play modules (often built as microservices). Instead of buying a massive, all-in-one suite where you only use 20% of the features, a composable approach allows you to assemble the “best-of-breed” tools for your specific needs.

Imagine if your PLM system worked like a smartphone. If you don’t like the default “BOM management” app, you could swap it for a specialized one that integrates better with your specific electronics workflow. If you need a high-end visualization tool, you plug it in via an API.

This is the antithesis of the “one size fits all” mentality. It acknowledges that a medical device company has vastly different needs than an aerospace firm or a fast-fashion brand.
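To make the smartphone analogy concrete, here is a minimal Python sketch of the principle behind composability: the platform depends only on a contract (an interface), so one BOM module can be swapped for another without touching the code that calls it. Every name here (`BomService`, `PlmPlatform`, the part numbers) is hypothetical and invented for illustration; a real composable PLM platform would expose such contracts as network APIs rather than in-process classes.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class BomLine:
    """One Bill of Materials line (hypothetical, minimal schema)."""
    part_number: str
    quantity: int


class BomService(Protocol):
    """Contract that any BOM module must satisfy to plug into the platform."""

    def get_bom(self, assembly_id: str) -> list[BomLine]: ...


class DefaultBomService:
    """Stand-in for a suite's built-in BOM module."""

    def __init__(self) -> None:
        self._boms = {"ASSY-100": [BomLine("BRKT-1", 2), BomLine("SCREW-M3", 8)]}

    def get_bom(self, assembly_id: str) -> list[BomLine]:
        return self._boms.get(assembly_id, [])


class ElectronicsBomService:
    """Stand-in for a specialized module that also tracks PCB assemblies."""

    def __init__(self) -> None:
        self._boms = {
            "ASSY-100": [BomLine("BRKT-1", 2), BomLine("SCREW-M3", 8), BomLine("PCB-7", 1)]
        }

    def get_bom(self, assembly_id: str) -> list[BomLine]:
        return self._boms.get(assembly_id, [])


class PlmPlatform:
    """Composes modules; callers depend only on the BomService contract."""

    def __init__(self, bom: BomService) -> None:
        self.bom = bom

    def swap_bom_module(self, bom: BomService) -> None:
        # Replacing the module requires no changes anywhere else.
        self.bom = bom

    def part_count(self, assembly_id: str) -> int:
        # Total quantity across all BOM lines, whichever module supplies them.
        return sum(line.quantity for line in self.bom.get_bom(assembly_id))


plm = PlmPlatform(DefaultBomService())
print(plm.part_count("ASSY-100"))  # 10
plm.swap_bom_module(ElectronicsBomService())
print(plm.part_count("ASSY-100"))  # 11
```

The design choice that matters is that `part_count` never names a concrete module; it relies only on `get_bom`, which is what turns "swap the BOM app" into a one-line change.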

Why the Next Decade Demands This Shift

If the current system is “fine,” why change now? Because the complexity of the next ten years will be unlike anything we’ve seen.

1. The Rise of the Digital Twin and Digital Thread

The “Digital Thread” is the idea that data should flow seamlessly from the first sketch through to manufacturing, service, and eventual recycling. A Digital Twin, a virtual model of a physical product kept in sync with data from its real-world counterpart, depends on that thread staying intact. You cannot maintain a digital thread if your data is trapped in rigid PLM silos. A composable architecture allows data to move via APIs, ensuring that the “truth” is accessible to every department, not just engineering.

2. AI and Machine Learning

AI is the buzzword of the year, but in PLM, it has practical applications: predictive maintenance, automated part sourcing, and generative design. However, AI thrives on clean, accessible data. Legacy monoliths often have “dark data” buried in proprietary formats. Cloud-native systems are built to expose data to AI engines, allowing companies to turn their history into a competitive advantage.

3. The Modern Workforce

The next generation of engineers (Gen Z and beyond) grew up with intuitive, fast, web-based tools. They expect their professional software to work like their personal apps. If your PLM system requires a 200-page manual and takes 30 seconds to load a search result, you will struggle to attract and retain talent. Flexible architectures make it far easier to deliver modern, responsive user experiences (UX).

4. Supply Chain Resilience

If the last few years taught us anything, it’s that supply chains are fragile. Companies need to be able to pivot to new suppliers in days, not months. A composable PLM can quickly integrate with third-party supply chain risk databases or carbon footprint trackers, giving decision-makers the data they need to be agile.

Overcoming the “But It’s Not Secure” Myth

For a long time, the biggest argument against cloud-native PLM was security. “We can’t put our IP on the internet,” was the common refrain.

Today, the reality is reversed. Major cloud providers (AWS, Azure, Google Cloud) spend billions on security, more than any single manufacturing company ever could. Cloud-native architectures allow for “Zero Trust” security models, where identity and access are verified at every single step, rather than relying only on a firewall around a building. In an era of rampant ransomware, a professionally managed cloud environment is, for most companies, safer than an on-premises server in a basement.
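As a rough illustration of “verify at every step,” the Python sketch below checks a short-lived, signed token on each individual request instead of trusting anything simply because it is already inside the network. It is a toy under stated assumptions: the hard-coded `SIGNING_KEY`, the `user|scope|expiry|signature` token format, and the scope names are all hypothetical. Production systems rely on an identity provider and standard formats such as signed JWTs, not hand-rolled tokens.

```python
import hashlib
import hmac
import time
from typing import Optional

# Hypothetical shared secret for this demo only. Real deployments use an
# identity provider and standard signed tokens, never a key hard-coded in source.
SIGNING_KEY = b"demo-only-key"


def _sign(payload: str) -> str:
    # HMAC-SHA256 over the token payload, hex-encoded.
    return hmac.new(SIGNING_KEY, payload.encode(), hashlib.sha256).hexdigest()


def issue_token(user: str, scope: str, ttl_s: int = 300, now: Optional[float] = None) -> str:
    """Issue a short-lived token that grants one user exactly one scope."""
    issued_at = time.time() if now is None else now
    payload = f"{user}|{scope}|{int(issued_at + ttl_s)}"
    return f"{payload}|{_sign(payload)}"


def verify(token: str, required_scope: str, now: Optional[float] = None) -> bool:
    """Run on EVERY request: re-check signature, expiry, and scope each time."""
    parts = token.split("|")
    if len(parts) != 4:
        return False  # malformed token
    user, scope, expiry, signature = parts
    expected = _sign(f"{user}|{scope}|{expiry}")
    if not hmac.compare_digest(signature.encode(), expected.encode()):
        return False  # forged or tampered
    current = time.time() if now is None else now
    if current > int(expiry):
        return False  # expired: a stolen token stops working quickly
    return scope == required_scope  # least privilege: no implicit extra rights


token = issue_token("alice", "bom:read", now=1000)
print(verify(token, "bom:read", now=1100))   # True
print(verify(token, "bom:write", now=1100))  # False (wrong scope)
print(verify(token, "bom:read", now=2000))   # False (expired)
print(verify(token.replace("alice", "mallory"), "bom:read", now=1100))  # False (tampered)
```

The point is not the token format but where the check lives: every service call re-verifies identity and permission, so a compromised machine inside the "building" gains nothing by default.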

How to Start the Transition

Transitioning to a flexible architecture doesn’t mean you have to “rip and replace” your entire system over a weekend. That is a recipe for disaster. Instead, the move to a composable future usually follows a three-step path:

- The “Side-by-Side” Strategy: Keep your legacy system for core data but start building new workflows (like sustainability reporting or supplier collaboration) on a cloud-native, composable platform.

- API-First Integration: Ensure that any new tool you buy has a robust, open API. Stop accepting “closed” systems that don’t talk to others.

- Incremental Migration: Gradually move your most painful processes to the new architecture. Over time, the “center of gravity” shifts from the old monolith to the new, flexible ecosystem.
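The side-by-side and incremental steps above resemble what software architects often call the “strangler fig” pattern: a thin routing layer sends each workflow either to the legacy system or to the new platform, and the set of migrated workflows grows over time. Here is a minimal Python sketch, where both backends are hypothetical stand-ins for real APIs and the workflow names are only examples.

```python
from __future__ import annotations

from typing import Callable

Handler = Callable[[dict], dict]


def legacy_plm(request: dict) -> dict:
    """Stand-in for the existing monolith's API."""
    return {"handled_by": "legacy", "workflow": request["workflow"]}


def composable_platform(request: dict) -> dict:
    """Stand-in for the new cloud-native, composable platform's API."""
    return {"handled_by": "cloud", "workflow": request["workflow"]}


class MigrationRouter:
    """Sends migrated workflows to the new platform and everything else to legacy."""

    def __init__(self, legacy: Handler, modern: Handler) -> None:
        self.legacy = legacy
        self.modern = modern
        self.migrated: set[str] = set()

    def migrate(self, workflow: str) -> None:
        # Moving a workflow is a routing change, not a big-bang cutover.
        self.migrated.add(workflow)

    def handle(self, request: dict) -> dict:
        backend = self.modern if request["workflow"] in self.migrated else self.legacy
        return backend(request)


router = MigrationRouter(legacy_plm, composable_platform)

# Side-by-side: new workflows start life on the new platform.
router.migrate("sustainability_reporting")
router.migrate("supplier_collaboration")
print(router.handle({"workflow": "change_order"})["handled_by"])              # legacy
print(router.handle({"workflow": "sustainability_reporting"})["handled_by"])  # cloud

# Incremental migration: move a painful process once the new module is ready.
router.migrate("change_order")
print(router.handle({"workflow": "change_order"})["handled_by"])              # cloud
```

When every workflow routes to the new platform, the legacy system can be retired without a weekend "rip and replace"; the center of gravity has already moved.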

Final Thoughts: The Cost of Doing Nothing

The biggest risk in PLM today isn’t a failed implementation; it’s the slow rot of obsolescence. If your PLM system makes it hard to change, hard to integrate, and hard to see your own data, it is actively working against your business goals.

The next decade will be defined by speed, complexity, and the ability to pivot. Flexible, cloud-native, and composable architectures aren’t just a “nice to have” for IT—they are a strategic imperative for the entire company.

It’s time to stop thinking of PLM as a static vault and start seeing it as a living, breathing ecosystem. The companies that make this shift now will be the ones designing the products of 2035. The ones that don’t? They’ll still be waiting for their monolith to finish loading.